Is there really a place for Municipal FTTH, if municipal purpose is founded on affording jurisdiction-wide opportunity rather than on validation by profit? And if there is, can the municipality avoid the conflicting interests that come with the many layers of execution in the full stack of an FTTH network, its operations, and its retail services?

YES, and yes.

The larger municipalities would be well served to participate on the layer that they know best, which is infrastructure. I say "know best" relative to the complexity of the services delivered on the most retail-customer-facing side. That complexity is similar to that of the services and upshots of roads, but dissimilar to the relative simplicity of the electricity and water upshots at the retail demarcation point.

That FTTH infrastructure layer would provide opportunity across all four municipal value-chains mentioned above. Its risk is also mitigated by full deployment, which offers many more possibilities for realizing value than a proportionate deployment based on the priorities or demands of only one or two value-chains. A full commitment to a full FTTeverything deployment is a set-it-and-forget-it solution for 40-60 years. It is neither an immediate bandage nor overkill, but rather a finite policy on connectedness being as basic a citizen need as clean water, sewer, garbage pickup, roads, electricity, and bylaws.

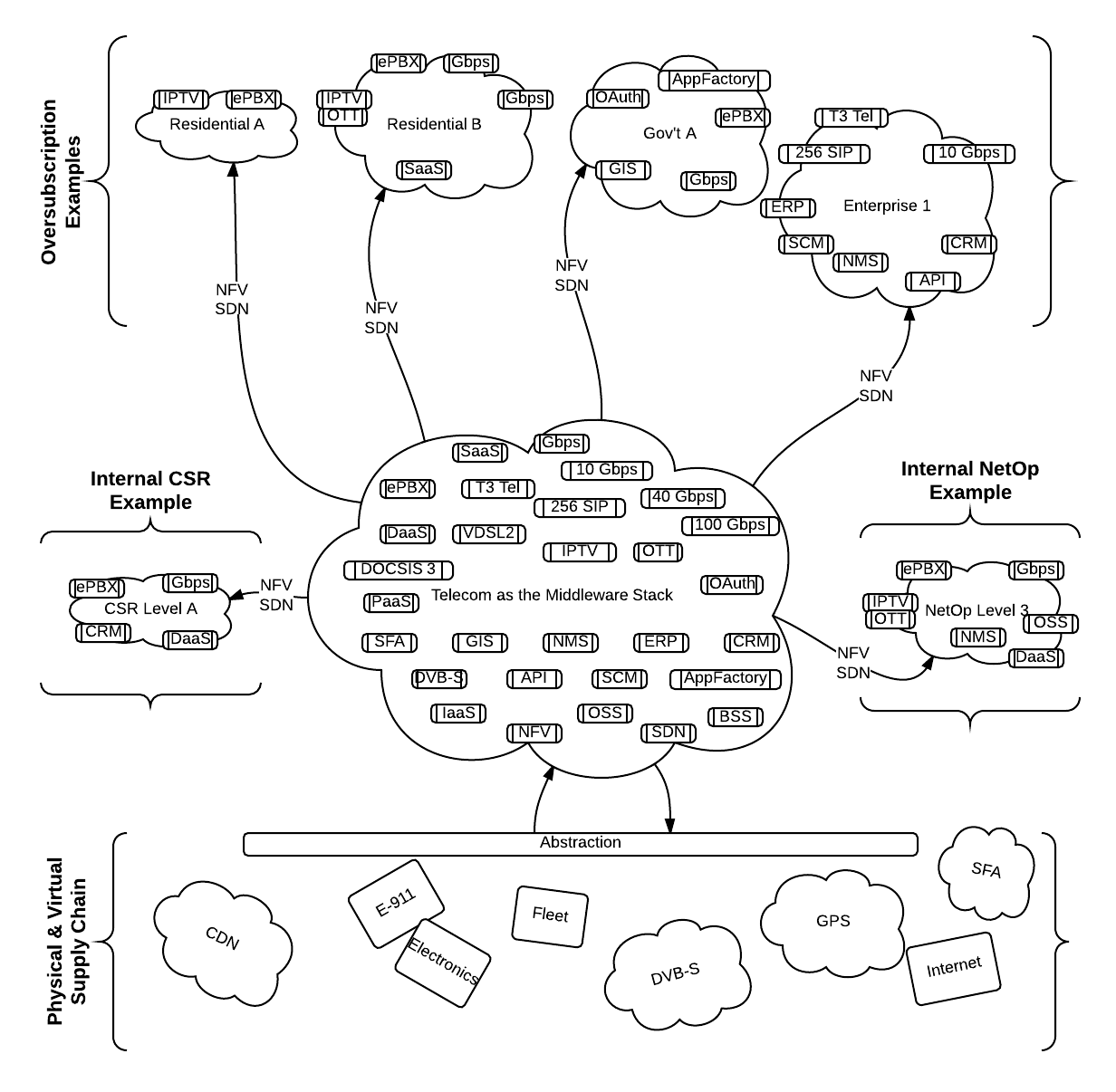

In my opinion, the Municipal FTTH space must be demarcated as the fibre asset in the ground, up to the termination point at the premises edge. This layer boundary is referred to as the NetCo. There is a possibility of extending the NetCo mandate through the other layers (OpCo and RSP), with a soft demarcation point within each premises, to provide basic internet with zero bells and whistles at the minimum standard the federal government sets in its definition of "highspeed". That extension is not a contradiction; it is a base-level service that offers a jurisdiction-wide option of opportunity, and it is also a possible key change-agent. Contact Lightcore Group today for complete details on the deployment and risk analysis for your municipality.

Beyond the NetCo is the network operator, which manages the network and enforces policy on it for the Retail Service Providers (RSPs). There is no consideration for net-neutrality here, other than that non-neutral is a business model. I fundamentally believe that private-industry business-models should not be regulated. Rather, if there is a fundamental belief in net-neutrality business-models, then government may need to look at ways of incentivizing private industry to grow in that direction, organically or naturally. In the same way that solar, wind, and bio-fuel energy sources required heavy subsidization (development capital, grants, and otherwise) to become available in the market as an option, so too might net-neutrality-based business-models need upfront subsidizing, not unfair advantage.

There needs to be clear delineation between the one or more RSPs and the NetCo, and that is the role of the OpCo(s). The OpCo(s) is a gateway and gatekeeper through which RSPs on-board and compete openly, without the RSPs being able to influence the municipality through direct-lobbying channels, unfair contracting mishaps, or an unfavourable connection between "government program" and "retail services". Some have suggested that the OpCo(s) would be a good fit for a non-profit. I fundamentally disagree, simply because the purpose of the OpCo(s) is to entice as much competition as possible without politics, turnover, or personal passions getting in the way.

In an ideal world, the OpCo(s) would be robots, or fixed-fee, 5-year tendered contracts awarded to up to [the maximum connections to each single premises, minus one] wholesale management companies. Those companies would, of course, sensibly be a mix of interest groups, incumbent subsidiaries, and market entrants.

The Retail Service Provider layer is very complex, and full of regulatory oversight and content-owner control. In this model, each retail provider should be able to choose its OpCo at will, in the hope of driving up innovation and collaboration, rather than collusion, among the OpCo(s).

What about the smaller communities? Is there something affordable that they could do?

YES, and kind-of.

In a market smaller than 100K, a new FTTH initiative as laid out above is not going to work very well. Functionally, it can be deployed without issue, but that "technical feasibility" is only 20% of the whole picture.

When Lightcore Group looks at feasibility, we break it down into five distinct categories, then build them back up, vetted against one another and intertwined, into a solid statement of feasibility.

In short, our municipal FTTH feasibility method goes by the acronym S.T.E.L.E.

- Social Feasibility

- Technical Feasibility

- Economic Feasibility

- Legal Feasibility; and

- Environmental Feasibility

The truth is that launching an ISP in a municipality of any size generally means facing a steep uphill battle against bundled services from other providers.